Environments – Data Sources and Ingestion

There are three Azure Databricks environments, as listed in Table 3.16.

TABLE 3.16 Azure Databricks environments

| Environment | Description |
| --- | --- |
| SQL | A platform optimized for users who typically run SQL queries to create dashboards and explore data |
| Data Science & Engineering | Used for collaborative Big Data pipeline initiatives |
| Machine Learning | An end‐to‐end ML environment for modeling, experimenting, and serving |

The Data Science & Engineering environment is the focus here, as it pertains most to the DP‐203 exam. It is where data engineers, machine learning engineers, and data scientists collaborate on, consume, and contribute to the data analytics solution. Figure 3.21 shows some of the options you will find while working in the Data Science & Engineering environment. The following sections provide additional information about those features.

Create

This section provides features to create notebooks, tables, clusters, and jobs.

Notebook

In step 6 of Exercise 3.14 you created a notebook to run code to create a metastore and then query it (refer to Figure 3.67). A few concepts surrounding the Azure Databricks notebook are discussed in the following subsections.

ACCESS CONTROL

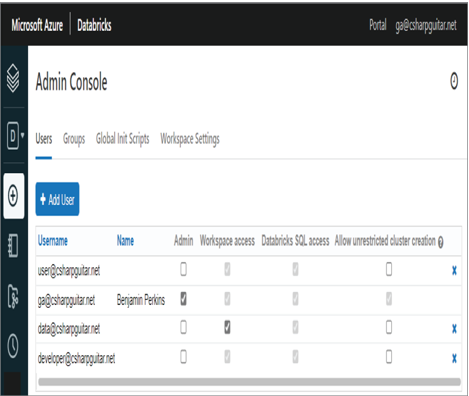

Access control is available only with the Azure Databricks Premium Plan; you may remember that option from the Pricing Tier drop‐down, where it was listed as Premium (+ Role‐based access control). By default, all users who have been granted access to the workspace can create and modify everything contained within it. This includes models, notebooks, folders, and experiments. You can view who has access to the workspace and their permissions on the Settings ➢ Admin Console ➢ Users page, which is discussed later and illustrated in Figure 3.68.

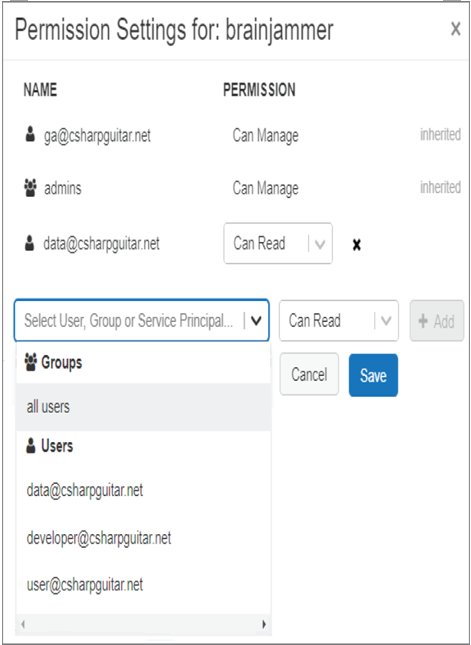

If you want to give a specific user access to a notebook, click Workspace ➢ Users ➢ the account that created the notebook ➢ the drop‐down menu arrow next to the notebook name ➢ Permissions. As shown in Figure 3.69, you can then select the user and the permission for that specific notebook.

The permission options are Can Read, Can Run, Can Edit, and Can Manage. Adding permissions per user is practical only for very small teams; for a larger team, consider assigning permissions to groups instead.
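The same group-based assignment can also be scripted rather than clicked through. The following is a minimal sketch, not a definitive implementation: it assumes the Databricks Permissions REST API endpoint `/api/2.0/permissions/notebooks/{notebook_id}` and its `access_control_list` request body, and the workspace URL, token, notebook ID, and group names are all hypothetical placeholders. The helper names `build_acl` and `grant_notebook_permissions` are introduced here for illustration only.

```python
import json
import urllib.request

# The four notebook permissions named in the text, mapped to the
# identifiers the Permissions REST API is assumed to expect.
PERMISSION_LEVELS = {
    "Can Read": "CAN_READ",
    "Can Run": "CAN_RUN",
    "Can Edit": "CAN_EDIT",
    "Can Manage": "CAN_MANAGE",
}


def build_acl(group_permissions):
    """Build a request body that grants each group one notebook permission.

    group_permissions maps a group name to a label such as "Can Edit".
    """
    entries = []
    for group_name, label in group_permissions.items():
        if label not in PERMISSION_LEVELS:
            raise ValueError(f"Unknown permission: {label}")
        entries.append(
            {"group_name": group_name, "permission_level": PERMISSION_LEVELS[label]}
        )
    return {"access_control_list": entries}


def grant_notebook_permissions(workspace_url, token, notebook_id, group_permissions):
    """Send the ACL to the workspace (hypothetical URL, token, and notebook ID).

    PATCH is assumed to add to the existing permissions instead of
    replacing them; verify this against the API reference before use.
    """
    request = urllib.request.Request(
        url=f"{workspace_url}/api/2.0/permissions/notebooks/{notebook_id}",
        data=json.dumps(build_acl(group_permissions)).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
        method="PATCH",
    )
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read())
```

Calling `build_acl({"data-engineers": "Can Edit", "analysts": "Can Read"})` produces the request body without contacting a workspace, which makes it easy to review the intended permissions before applying them. Keeping the payload construction separate from the network call is a design choice for this sketch, not a requirement of the API.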

FIGURE 3.68 Azure Databricks Settings Admin Console Users

FIGURE 3.69 Azure Databricks Workspace User notebook Permission