Job Topology – Data Sources and Ingestion

The word topology is common in IT, where it is most often encountered in the context of a network topology. It is simply the arrangement of interrelated parts that constitute the whole. The same definition applies to a job topology, which combines inputs, functions, queries, and outputs.

Inputs

There are two types of inputs: stream and reference. A stream input, which you created in Exercise 3.17, can be blob/ADLS, an event hub, or an IoT hub. The configuration for each type is very similar, and whatever is added to any of those ingestion products is processed by the Azure Stream Analytics job. A reference input can be either blob/ADLS or a SQL database. This kind of input is useful for job queries that need reference data, for example, a dimensional or a temporal table. Consider the following query:

SELECT b.VALUE, b.READING_DATETIME, f.FREQUENCY FROM brainwaves b TIMESTAMP BY READING_DATETIME JOIN frequency f ON b.FREQUENCY_ID = f.FREQUENCY_ID WHERE f.FREQUENCY_ID = 1

An input stream named brainwaves is joined to a reference input named frequency. Note that part of the configuration of the reference input includes a SQL query to retrieve and store a local copy of that table, for example:

SELECT FREQUENCY_ID, FREQUENCY, ACTIVITY
FROM FREQUENCY

The values for b.VALUE and b.READING_DATETIME would be captured from the message stream sent via the event hub, whereas the value for f.FREQUENCY would be pulled from the reference input source table.
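To make the join concrete, the following JavaScript sketch mimics in plain code what the query does logically: each streamed event is matched against the local copy of the reference table. It is an illustration only, not how Azure Stream Analytics implements joins. The helper name joinWithReference and all sample rows and values are hypothetical.

```javascript
// Local copy of the FREQUENCY reference table, keyed by FREQUENCY_ID.
// The rows are invented sample data, not taken from the real table.
const frequency = new Map([
  [1, { FREQUENCY_ID: 1, FREQUENCY: "ALPHA", ACTIVITY: "Relaxed" }],
  [2, { FREQUENCY_ID: 2, FREQUENCY: "BETA", ACTIVITY: "Focused" }],
]);

// Events as they might arrive from the event hub (hypothetical values).
const brainwaves = [
  { VALUE: 4.2, READING_DATETIME: "2022-01-01T10:00:00Z", FREQUENCY_ID: 1 },
  { VALUE: 7.9, READING_DATETIME: "2022-01-01T10:00:01Z", FREQUENCY_ID: 2 },
  { VALUE: 3.1, READING_DATETIME: "2022-01-01T10:00:02Z", FREQUENCY_ID: 1 },
];

// Hypothetical helper that mirrors the JOIN, WHERE, and SELECT clauses.
function joinWithReference(events, reference, frequencyId) {
  const rows = [];
  for (const b of events) {
    // JOIN frequency f ON b.FREQUENCY_ID = f.FREQUENCY_ID
    const f = reference.get(b.FREQUENCY_ID);
    if (f === undefined) continue; // inner join: drop unmatched events
    // WHERE f.FREQUENCY_ID = 1
    if (f.FREQUENCY_ID !== frequencyId) continue;
    // SELECT b.VALUE, b.READING_DATETIME, f.FREQUENCY
    rows.push({
      VALUE: b.VALUE,
      READING_DATETIME: b.READING_DATETIME,
      FREQUENCY: f.FREQUENCY,
    });
  }
  return rows;
}

const result = joinWithReference(brainwaves, frequency, 1);
console.log(result);
```

In each output row, VALUE and READING_DATETIME come from the event and FREQUENCY comes from the reference row, which mirrors the projection in the query.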

Functions

Chapter 2 introduced user‐defined functions (UDFs). Azure Stream Analytics supports UDFs, which enable you to extend existing capabilities through custom code. The following function types are currently supported:

- Azure ML Service

- Azure ML Studio

- JavaScript UDA

- JavaScript UDF

Suppose you have created a JavaScript UDF via the Azure Portal IDE that resembles the following code snippet:

function squareRoot(n) {return Math.sqrt(n);}

You can then call the UDF in a query against your input stream named brainwaves using the following syntax:

SELECT SCENARIO, ELECTRODE, FREQUENCY, VALUE, UDF.squareRoot(VALUE) AS VALUESQUAREROOT
INTO powerBI
FROM brainwaves

The result would be stored in the configured output location named, for example, powerBI.
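One detail worth keeping in mind with a UDF like squareRoot is how JavaScript treats unexpected input: Math.sqrt(null) evaluates to 0 and Math.sqrt of a negative number evaluates to NaN. The following is a minimal sketch of a more defensive variant. The name safeSquareRoot is hypothetical, and returning null for unusable input is an assumption about what a job might want, not a documented requirement.

```javascript
// Hypothetical defensive variant of the squareRoot UDF.
// Assumption: non-numeric, NaN, or negative input should yield null
// rather than a misleading 0 or NaN.
function safeSquareRoot(n) {
  if (typeof n !== "number" || Number.isNaN(n) || n < 0) {
    return null;
  }
  return Math.sqrt(n);
}

// Local checks you can run outside Azure, for example with Node.js.
console.log(safeSquareRoot(9));
console.log(safeSquareRoot(null));
console.log(safeSquareRoot(-4));
```

Because the function body is plain JavaScript, you can exercise it locally like this before pasting it into the portal editor.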

Query

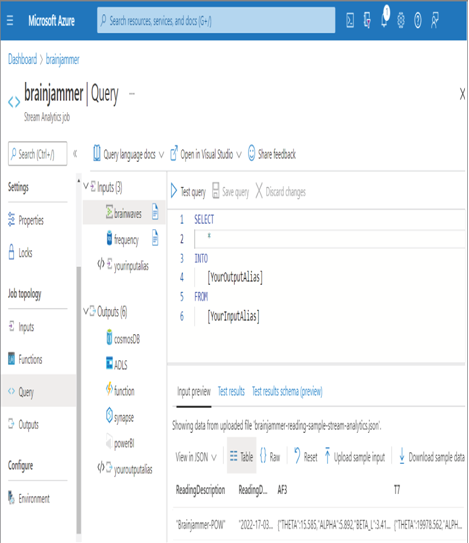

The query that runs against each piece of data arriving on the Azure Stream Analytics input stream is where the magic begins. This is where you analyze the stream data and perform storage, transformation, computation, and/or alerting activities. Figure 3.79 shows an example of how the Query blade might look.

Azure Stream Analytics offers extensive support for queries. The available syntax is a subset of T‐SQL and is more than enough to meet even the most demanding requirements. If by chance it does not, you can implement a UDF to fill the gap. The querying capabilities are split into the following categories, most of which you will find familiar. Not all of the capabilities are called out, only the ones that are most likely to be on the DP‐203 exam.

FIGURE 3.79 An Azure Stream Analytics job query